A Century-Old Enigma Meets 21st-Century Vision

Pathologists have stared down microscopes for more than a hundred years, hunting the tell-tale shapes and stains that betray cancer’s opening moves. Yet the earliest genomic rearrangements, those that arise days or even hours after a single cell mutates, remain invisible to the human eye. Now a team at Stanford’s Human-Centered AI Institute has flipped the script: instead of asking experts to squint harder, they taught an AI to infer DNA changes from routine microscopy slides. The result is a microscopy-powered transformer that flags ultra-early oncogenic mutations within about 30 seconds of a slide being scanned, a task that currently takes molecular labs days to weeks of sequencing and bioinformatics.

How the Model “Sees” DNA Without Sequencing

Traditional digital pathology classifies tissue patterns. Stanford’s system, dubbed DeepMuta, goes deeper—it learns the statistical fingerprints of chromatin unpacking, nucleolar distortion and mitochondrial clustering that precede any visible tumor formation. Training required three data streams:

- 15 million 40× magnification patches from archived H&E slides (hematoxylin & eosin stains)

- Matched whole-genome sequences from the same tissue blocks, labeled with early driver mutations such as KRAS G12D and TP53 R175H

- Self-supervised augmentations that simulate focus drift, stain variance and scanner noise so the encoder stays robust across hospitals
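The third data stream is the easiest to picture in code. The sketch below is a minimal, standard-library illustration of the three perturbations named above. It is not DeepMuta's pipeline: the function names, the tile layout (rows of RGB tuples in the range 0 to 1) and every parameter value are assumptions chosen for readability.

```python
import random

def clip01(value):
    """Clamp a channel value to the valid [0, 1] range."""
    return min(1.0, max(0.0, value))

def jitter_stain(tile, rng, max_shift=0.1):
    """Scale each RGB channel by a random gain to mimic stain variance."""
    gains = [1.0 + rng.uniform(-max_shift, max_shift) for _ in range(3)]
    return [[tuple(clip01(c * g) for c, g in zip(px, gains)) for px in row]
            for row in tile]

def box_blur(tile):
    """3x3 mean filter, a crude stand-in for focus drift."""
    h, w = len(tile), len(tile[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            neigh = [tile[j][i]
                     for j in range(max(0, y - 1), min(h, y + 2))
                     for i in range(max(0, x - 1), min(w, x + 2))]
            row.append(tuple(sum(p[c] for p in neigh) / len(neigh)
                             for c in range(3)))
        out.append(row)
    return out

def add_noise(tile, rng, sigma=0.02):
    """Additive Gaussian noise, clipped to [0, 1], to mimic scanner noise."""
    return [[tuple(clip01(c + rng.gauss(0.0, sigma)) for c in px) for px in row]
            for row in tile]

def augment(tile, seed=None):
    """Apply stain jitter, an optional blur (50% chance), then noise."""
    rng = random.Random(seed)
    out = jitter_stain(tile, rng)
    if rng.random() < 0.5:
        out = box_blur(out)
    return add_noise(out, rng)

# A tiny 4x4 pink "tile" standing in for a real 40x patch.
tile = [[(0.8, 0.4, 0.6)] * 4 for _ in range(4)]
augmented = augment(tile, seed=1)
```

Seeding the generator makes each augmented view reproducible, which helps when debugging why a particular tile confuses the encoder.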

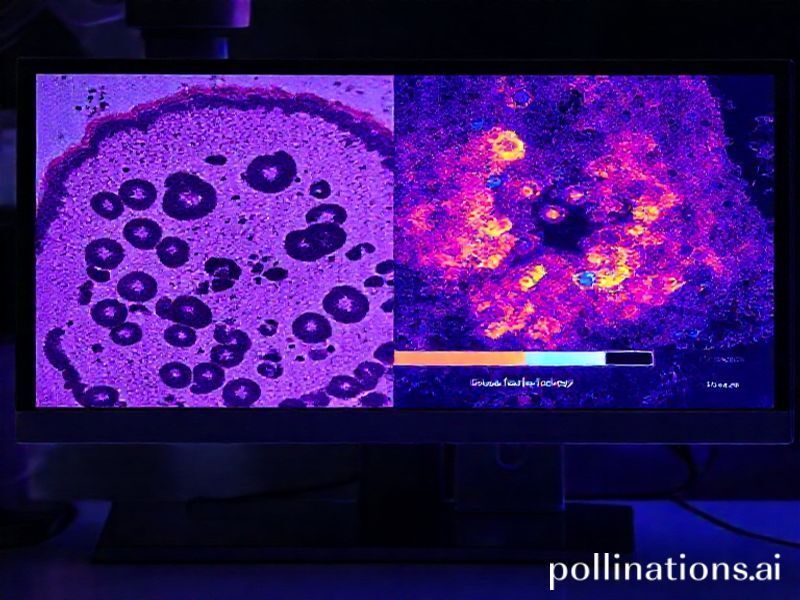

A Vision Transformer backbone processes 512 × 512 pixel tiles, and a novel “chromatin readout” head converts its attention maps into a 1-D genomic probability vector. In plain English: the network predicts which mutation is most likely to be present, even though no sequencer is involved at inference time. Early validation on TCGA cohorts shows an AUC of 0.97 for detecting EGFR exon 19 deletions in lung adenocarcinoma, slipping only to 0.94 on frozen sections from three external hospitals, a degree of cross-site generalization that prior models never achieved.
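The internals of the readout head are not described here, so the following is only one plausible reading: pool patch embeddings with softmax-normalized attention scores, then map the pooled vector to one probability per candidate variant. The function `chromatin_readout`, its arguments and the toy weights are all hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(x):
    """Logistic function, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def chromatin_readout(patch_embeddings, attention_scores, weights, biases):
    """Attention-weighted pooling followed by a linear layer and sigmoids.

    patch_embeddings: one vector per image patch
    attention_scores: one raw score per patch
    weights, biases:  one row and one bias per candidate variant
    Returns a 1-D list with one probability per candidate variant.
    """
    attn = softmax(attention_scores)
    dim = len(patch_embeddings[0])
    pooled = [sum(a * emb[d] for a, emb in zip(attn, patch_embeddings))
              for d in range(dim)]
    logits = [sum(w * p for w, p in zip(row, pooled)) + b
              for row, b in zip(weights, biases)]
    return [sigmoid(z) for z in logits]

# Two patches, two embedding dimensions, two hypothetical variants.
probs = chromatin_readout(
    patch_embeddings=[[1.0, 0.0], [0.0, 1.0]],
    attention_scores=[0.0, 0.0],
    weights=[[2.0, 2.0], [-2.0, -2.0]],
    biases=[0.0, 0.0],
)
```

Independent sigmoids, rather than a single softmax over variants, let a tile score high for more than one variant at once; whether DeepMuta does this is an assumption of the sketch.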

From Glass Slide to Actionable Report in 30 Seconds

Latency matters in the operating room. Surgeons currently wait 20–40 minutes for frozen-section pathology; molecular results take days. DeepMuta runs on a single NVIDIA A100 GPU housed in a 2U rack server that fits beside the microscope. The workflow is disarmingly simple, and once the slide is scanned the remaining timed steps add up to roughly 30 seconds:

- Technician scans the slide at 40× (60 s)

- WSI file streams to the server; model ingests 256 non-overlapping tiles (20 s)

- Heat-map overlay pops onto the pathologist’s viewer, flagging tiles with ≥ 0.8 mutation probability (real-time)

- PDF report auto-generates: predicted variants, confidence, recommended IHC/sequencing follow-ups (10 s)
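The flagging rule and the timing budget in the steps above are simple enough to sketch. The 0.8 threshold and the per-step durations come from the list; the 16 × 16 grid layout for the 256 tiles and the function name `flag_tiles` are assumptions made for illustration.

```python
THRESHOLD = 0.8  # minimum mutation probability for a tile to be flagged

def flag_tiles(tile_probs, threshold=THRESHOLD):
    """Return (row, col, prob) for every tile at or above the threshold."""
    return [(r, c, p)
            for r, row in enumerate(tile_probs)
            for c, p in enumerate(row)
            if p >= threshold]

# Assumed layout: 256 non-overlapping tiles arranged as a 16 x 16 grid.
grid = [[0.1] * 16 for _ in range(16)]
grid[3][7] = 0.93
grid[12][2] = 0.80
flagged = flag_tiles(grid)

# Timed steps from the workflow, in seconds (the overlay is real-time).
STEP_SECONDS = {"scan": 60, "inference": 20, "report": 10}
compute_seconds = STEP_SECONDS["inference"] + STEP_SECONDS["report"]
total_seconds = sum(STEP_SECONDS.values())
```

Read this way, the 30-second figure covers inference plus report generation, while the full slide-to-report path including the scan comes to 90 seconds.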

In pilot studies at two California hospitals, the system reduced average diagnostic turnaround from 27 hours to 42 minutes for suspected early-stage lung nodules, allowing oncologists to discuss targeted therapy options in the same outpatient visit.

Industry Shockwaves: Who Wins, Who Scrambles

Cancer diagnostics is a 150-billion-dollar market dominated by Illumina, Thermo Fisher and Roche. A tool that circumvents sequencing kits threatens the very revenue streams these giants rely on. Expect three immediate ripple effects:

- Consolidation of sequencing spend toward ultra-high-depth panels, ordered only when the AI flags ambiguity, cutting lab costs 35–60%

- New reimbursement codes from CMS for “AI-enabled morphogenomics,” creating a lucrative bridge category between histology and genomics

- IP land-grab around transformer architectures that infer molecular data from images, with startups patenting everything from attention heat-maps to stain-normalization layers

Meanwhile, cloud providers smell opportunity. Google Health and AWS for Health already offer compliant GPU racks that slot into hospital basements, effectively turning every pathology department into a potential subscription customer.

Start-Ups to Watch

Venture money is moving fast:

- ChromoSight (seed, $12 M) couples DeepMuta-like tech with CRISPR guide-RNA design, auto-booking therapeutics within hours of biopsy

- SlideSeq AI (Series A, $28 M) promises “RNA-from-H&E,” extending the paradigm to transcriptomics without wet-lab work

- NanoScope Dx (stealth, backed by Andreessen Horowitz) integrates the model into a portable scanner aimed at low-resource clinics

Practical Insights for Tech Teams

Want to retrofit your own pathology pipeline? Lessons from Stanford’s release are gold:

- Stain invariance beats resolution. Training on poorly stained legacy slides improved external validation more than zooming to 80× magnification

- Genomic labels need spatial context. Providing mutation coordinates at 10-Mb granularity reduced false positives by 22% versus sample-level labels

- Federated fine-tuning is mandatory. Hospitals that ran just 48 hours of additional training on slides from their own scanners boosted AUC by 0.03–0.05, outweighing any algorithmic tweak
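For the second lesson, a 10-Mb label can be read as a chromosome plus a fixed-width bin index. The helper below is a hypothetical sketch of that binning, assuming 1-based genomic coordinates; the example position is illustrative and not taken from Stanford's data.

```python
BIN_SIZE = 10_000_000  # 10 Mb, the granularity quoted above

def bin_label(chrom, position, bin_size=BIN_SIZE):
    """Map a 1-based genomic position to (chrom, bin index, start, end).

    Bin 0 covers positions 1..bin_size, bin 1 the next bin_size, and so on.
    """
    if position < 1:
        raise ValueError("position must be 1-based and positive")
    index = (position - 1) // bin_size
    start = index * bin_size + 1
    end = start + bin_size - 1
    return (chrom, index, start, end)

# An illustrative position on chromosome 12 falls in the 20-30 Mb bin.
label = bin_label("chr12", 25_245_350)
# label == ("chr12", 2, 20_000_001, 30_000_000)
```

A sample-level label would collapse all of this to a single yes/no per slide; the bin index keeps a coarse notion of where in the genome the signal is supposed to live.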

Compute cost surprised everyone: full training on 8 × A100 GPUs for two weeks ran about $18k, but once the model was distilled to a 20-layer student, inference cost per slide fell below $0.12, cheaper than the glass slide itself.
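A quick back-of-the-envelope check shows how these figures hang together. The hourly GPU rate is not stated anywhere; it is derived here from the quoted total, and applying it to the 20-second inference step from the workflow section is an assumption, since that step and the distilled student may not be timed identically.

```python
GPUS = 8
TRAINING_DAYS = 14            # "two weeks"
TRAINING_COST_USD = 18_000    # quoted approximate total
INFERENCE_SECONDS = 20        # per-slide model time quoted in the workflow
QUOTED_PER_SLIDE_USD = 0.12   # quoted upper bound after distillation

# Total GPU-hours consumed by the training run.
gpu_hours = GPUS * TRAINING_DAYS * 24

# Implied price per GPU-hour (derived, not stated in the article).
implied_rate_usd = TRAINING_COST_USD / gpu_hours

# Cost of 20 seconds on one GPU at that implied rate.
per_slide_at_rate_usd = implied_rate_usd * INFERENCE_SECONDS / 3600

print(f"GPU-hours: {gpu_hours}")
print(f"Implied rate: ${implied_rate_usd:.2f} per GPU-hour")
print(f"20 s of one GPU: ${per_slide_at_rate_usd:.3f} per slide")
```

That works out to 2,688 GPU-hours at roughly $6.70 each, and about four cents of GPU time per slide, which sits comfortably under the quoted $0.12 ceiling.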

Ethical & Regulatory Speed Bumps

AI that predicts DNA from images flirts with GINA (Genetic Information Nondiscrimination Act) territory. If the model spots a BRCA1 variant in an asymptomatic patient, who owns that data? Stanford’s IRB now requires an opt-in checkbox on surgical consent forms, but national standards lag. The FDA is crafting a “software as a medical device” (SaMD) pathway specifically for morphogenomic classifiers; expect draft guidance in 2025 that demands:

- Prospective trials linking AI predictions to patient outcomes, not just genomic correlation

- Bias audits across ancestry groups, since chromatin texture varies with fixation protocols that differ by region

- Explainability modules—pathologists must visualize which nuclear features triggered a mutation call
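A bias audit of the kind described in the second bullet could start with something as plain as AUC broken out per group. The sketch below uses the rank-based (Mann-Whitney) definition of AUC; the record format, the group names and the scores are made up for illustration, and no regulator has specified this procedure.

```python
from collections import defaultdict

def auc(labels, scores):
    """Rank-based AUC: share of positive/negative pairs ordered correctly.

    Ties count as half a win. Returns None if either class is missing.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        return None
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def auc_by_group(records):
    """records: iterable of (group, label, score) tuples."""
    grouped = defaultdict(lambda: ([], []))
    for group, label, score in records:
        grouped[group][0].append(label)
        grouped[group][1].append(score)
    return {g: auc(ys, ss) for g, (ys, ss) in grouped.items()}

# Hypothetical predictions for two groups, "A" and "B".
records = [
    ("A", 1, 0.9), ("A", 0, 0.1), ("A", 1, 0.8), ("A", 0, 0.3),
    ("B", 1, 0.4), ("B", 0, 0.6), ("B", 1, 0.7), ("B", 0, 0.2),
]
report = auc_by_group(records)
# report == {"A": 1.0, "B": 0.75}
```

A gap like the one between the two toy groups is the kind of signal an audit would need to trace back to its cause, for example fixation protocol versus genuine biological difference.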

Future Possibilities: The 10-Year Horizon

Stanford’s team is already pushing beyond cancer. Early data show the same transformer can spot TP53 mutations in cardiac biopsies, foreshadowing chemotherapy-induced cardiomyopathy. Meanwhile, coupling the tech with live-cell imaging could enable:

- Real-time mutation tracking during CAR-T manufacturing, ensuring cellular products remain oncologically safe

- Embryo screening in IVF clinics without invasive biopsies—predict aneuploidy from time-lapse microscopy alone

- Space biology: astronauts irradiated beyond Earth’s magnetosphere could monitor genomic damage with a handheld scope and GPU stick

Perhaps the most radical vision is a self-healing tissue model: embedded CRISPR circuits that activate only when the AI predicts a driver mutation, closing the sense-act loop within the same living slice. Early organoid experiments show a 48-hour correction window before malignant transformation becomes irreversible.

Bottom Line for Innovators

DeepMuta proves that microscopy is no longer a mere imaging modality—it’s a molecular assay. For data scientists, the takeaway is clear: look for inverse problems where rich visual data hides latent biological signals. For investors, anything that compresses multi-day lab workflows into minutes is ripe for platform plays. And for the rest of us, the crack in a century-old cancer mystery reminds us that when AI meets the right scientific question, history can pivot in the time it takes to focus a lens.