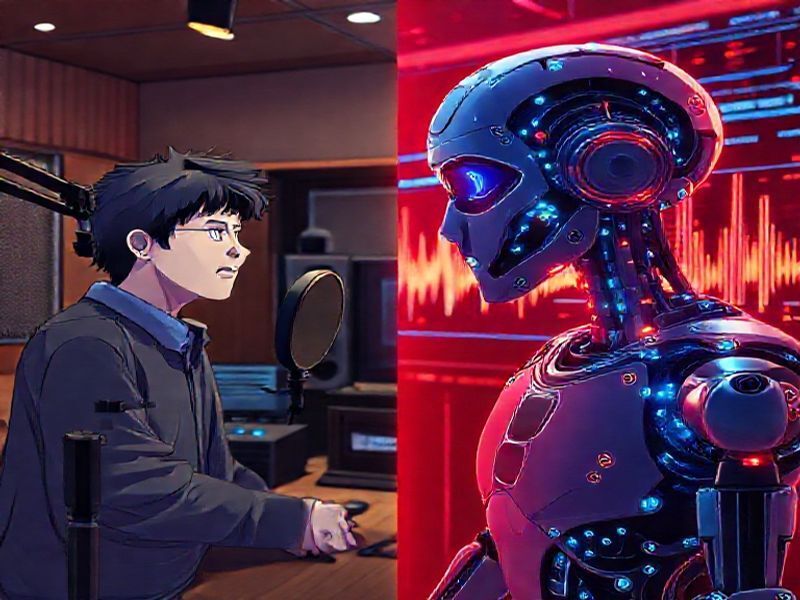

Amazon’s AI Anime Dubbing Debacle: When Innovation Meets Fan Expectations

In a stunning reversal that highlights the growing pains of AI-generated content, Amazon Prime Video has abruptly pulled its experimental AI-dubbed anime series following intense backlash from fans and critics. The streaming giant’s ambitious project to use generative AI for Japanese-to-English voice dubbing has become a cautionary tale about the limits of current AI technology in creative industries.

The Promise That Fell Flat

Amazon’s foray into AI-powered dubbing seemed like a logical step forward. With the global anime market valued at over $28 billion and growing at 9.5% annually, the company saw an opportunity to dramatically reduce localization costs while accelerating content delivery. Traditional anime dubbing can take weeks or months, involving voice actors, directors, and extensive post-production work.

The AI system, developed by Amazon’s Machine Learning Solutions Lab, promised to:

- Generate natural-sounding English voices from Japanese source material

- Maintain emotional context and character personality

- Reduce localization time by up to 70%

- Enable same-day releases for international audiences

However, the reality proved far different from the promise.

The Technical Breakdown: What Went Wrong

Voice Synthesis Limitations

The AI system, while technologically impressive, suffered from fundamental flaws that became immediately apparent to viewers. The generated voices lacked the nuance and emotional depth that fans expect from professional voice acting. Characters sounded monotone during dramatic scenes, emotional peaks felt flat, and comedic timing was completely off.

Technical analysis revealed several critical issues:

- Prosody Problems: The AI struggled with intonation patterns, creating unnatural speech rhythms

- Context Blindness: Emotional context wasn’t properly mapped to voice modulation

- Cultural Translation Gaps: Japanese cultural expressions lost meaning in direct AI translation

- Lip-Sync Issues: Timing mismatches disrupted the viewing experience
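
The lip-sync problem in the list above can be made concrete with a simple duration check. The sketch below is illustrative only: the `Segment` type, the `flag_timing_mismatches` helper, and the 15% tolerance are assumptions made for this example, not details of Amazon's system.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One line of dialogue with its on-screen and dubbed durations (seconds)."""
    line_id: str
    source_duration: float  # how long the character's mouth moves on screen
    dubbed_duration: float  # how long the synthesized English audio runs


def flag_timing_mismatches(segments, tolerance=0.15):
    """Return IDs of lines whose dubbed audio deviates from the source
    duration by more than `tolerance` (a fraction of the source duration)."""
    flagged = []
    for seg in segments:
        if seg.source_duration <= 0:
            flagged.append(seg.line_id)  # cannot compare; needs manual review
            continue
        drift = abs(seg.dubbed_duration - seg.source_duration) / seg.source_duration
        if drift > tolerance:
            flagged.append(seg.line_id)
    return flagged


# Hypothetical sample data, invented for illustration.
segments = [
    Segment("ep1_l1", source_duration=2.0, dubbed_duration=2.1),  # 5% drift
    Segment("ep1_l2", source_duration=1.5, dubbed_duration=2.4),  # 60% drift
    Segment("ep1_l3", source_duration=3.0, dubbed_duration=2.2),  # about 27% drift
]
print(flag_timing_mismatches(segments))
```

A check like this only catches gross duration drift. It says nothing about whether mouth shapes match the spoken syllables, which is the harder part of lip-sync.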

Machine Learning Model Shortcomings

Amazon’s system used a sophisticated neural network trained on thousands of hours of dubbed anime content. However, the training data proved insufficient for capturing the artistry of voice acting. Unlike human actors, who understand character motivations and story arcs, the AI processed dialogue as isolated text strings.

The model architecture combined:

- Transformer-based language translation networks

- Generative adversarial networks (GANs) for voice synthesis

- Prosody prediction algorithms

- Real-time audio processing pipelines

Despite this technical sophistication, the system failed to replicate the human touch that makes anime dubbing an art form.
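
How the listed components might fit together can be sketched as a staged pipeline. Everything here is hypothetical: the stage functions are stubs that return placeholder values, the names are invented for illustration, and no real model or Amazon API is called. The point is the data flow, in particular that each line passes through on its own with no scene or character context.

```python
from dataclasses import dataclass, field


@dataclass
class DubbedLine:
    source_text: str
    translation: str = ""
    prosody: dict = field(default_factory=dict)
    audio: bytes = b""


def translate(line: DubbedLine) -> DubbedLine:
    # Stub standing in for a transformer-based translation model (hypothetical).
    line.translation = f"[EN] {line.source_text}"
    return line


def predict_prosody(line: DubbedLine) -> DubbedLine:
    # Stub prosody predictor: a flat default, because no context is available.
    line.prosody = {"pitch": "neutral", "rate": 1.0}
    return line


def synthesize(line: DubbedLine) -> DubbedLine:
    # Stub standing in for a GAN-based synthesizer: placeholder bytes, not audio.
    line.audio = line.translation.encode("utf-8")
    return line


PIPELINE = [translate, predict_prosody, synthesize]


def dub(source_lines):
    """Run every line through every stage, one line at a time."""
    results = []
    for text in source_lines:
        line = DubbedLine(source_text=text)
        for stage in PIPELINE:
            line = stage(line)
        results.append(line)
    return results


for line in dub(["Iku zo!", "Arigatou"]):
    print(line.translation, line.prosody)
```

Because `dub` handles each string independently, every line gets the same neutral prosody. That mirrors the "isolated text strings" limitation described above.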

Industry Implications and Lessons Learned

The Human Element in Creative AI

Amazon’s failure underscores a crucial lesson for the AI industry: creative fields require more than just technical accuracy. Voice acting involves understanding subtext, cultural nuances, and emotional intelligence—areas where current AI still falls short.

The backlash revealed deeper concerns about AI replacing human creatives. Professional voice actors, represented by SAG-AFTRA (the union formed when the Screen Actors Guild merged with AFTRA in 2012), viewed the technology as an existential threat. The controversy sparked debates about:

- Job displacement in creative industries

- The value of human artistic interpretation

- Quality standards for AI-generated content

- Consumer acceptance of synthetic media

Market Response and Competitor Strategies

Amazon’s competitors watched closely, learning valuable lessons. Netflix, which has invested heavily in anime content, doubled down on human-led localization while exploring AI as a supplementary tool rather than a replacement. Crunchyroll emphasized its commitment to professional voice actors, seeing the controversy as a competitive advantage.

Industry analysts predict this setback will:

- Slow AI adoption in creative content production

- Increase investment in human-AI collaboration tools

- Establish new quality benchmarks for synthetic media

- Drive demand for more sophisticated creative AI solutions

Future Possibilities: Where AI Dubbing Goes From Here

Emerging Technologies and Approaches

Despite the current failure, AI dubbing technology continues to evolve rapidly. Several promising approaches could address the shortcomings that doomed Amazon’s project:

Hybrid Human-AI Systems: Rather than full automation, future systems might use AI for initial translation and timing, with human actors providing emotional performance and quality control.

Emotion-Aware Models: Next-generation AI could incorporate emotional intelligence, understanding character arcs and contextual emotions to deliver more natural performances.

Personalized Dubbing: AI could generate multiple voice options, allowing viewers to choose their preferred style or even customize character voices.
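
One possible variant of the hybrid approach described above is a routing step that keeps the AI draft for routine lines and sends the rest to human actors. This is a minimal sketch under stated assumptions: the `confidence` and `emotional_intensity` scores, both thresholds, and the `route` function are hypothetical, and a real system would need some way to produce such scores.

```python
from dataclasses import dataclass


@dataclass
class DraftLine:
    line_id: str
    confidence: float           # hypothetical model self-score in [0, 1]
    emotional_intensity: float  # hypothetical scene annotation in [0, 1]


def route(lines, min_confidence=0.8, max_intensity=0.5):
    """Split lines into those the AI draft may keep and those sent to a human."""
    ai_ok, human = [], []
    for line in lines:
        if line.confidence >= min_confidence and line.emotional_intensity <= max_intensity:
            ai_ok.append(line.line_id)
        else:
            human.append(line.line_id)
    return ai_ok, human


# Hypothetical sample data, invented for illustration.
drafts = [
    DraftLine("crowd_chatter", confidence=0.93, emotional_intensity=0.1),
    DraftLine("farewell_scene", confidence=0.90, emotional_intensity=0.9),
    DraftLine("pun_heavy_joke", confidence=0.40, emotional_intensity=0.3),
]
ai_ok, human = route(drafts)
print("AI draft kept:", ai_ok)
print("Sent to human actors:", human)
```

The design choice here is to default to human performance: a line stays with the AI only if it clears both thresholds, so uncertain or emotionally heavy material always reaches an actor.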

Technical Innovations on the Horizon

Research labs worldwide are developing solutions to current limitations:

- Multimodal Learning: Systems that analyze visual cues, script context, and audio simultaneously

- Transfer Learning: Models that learn from specific voice actors to maintain consistency

- Real-time Adaptation: AI that adjusts performance based on viewer feedback

- Cultural AI: Systems trained on cultural context and idiomatic expressions

The Road Ahead: Balancing Innovation and Authenticity

Realistic Timelines and Expectations

Experts predict that truly convincing AI dubbing remains 5-10 years away. The technology needs fundamental breakthroughs in understanding human emotion, cultural context, and artistic interpretation. However, incremental improvements could make AI a valuable tool for specific use cases.

Short-term applications might include:

- Quick preview dubs for content evaluation

- Accessibility features, such as dubs for viewers who are blind or have low vision and cannot read subtitles

- Low-budget or educational content

- Background character voices in games

Building Trust and Acceptance

For AI dubbing to succeed, companies must rebuild trust with both creators and consumers. This requires:

- Transparent communication about AI involvement

- Clear quality standards and testing protocols

- Respect for human creative contributions

- Gradual introduction with opt-in choices

Amazon’s experience serves as a valuable lesson: innovation without consideration for human creativity and consumer expectations leads to failure. The future of AI in creative industries lies not in replacement but in augmentation—enhancing human capabilities rather than substituting them.

As the technology matures, successful implementations will likely emerge from companies that respect both the technical possibilities and the irreplaceable value of human creativity. The anime industry’s passionate fanbase has spoken clearly: quality and authenticity matter more than convenience or cost savings.