When Gravity Wins: The Optimus Stumble Heard ’Round the Tech World

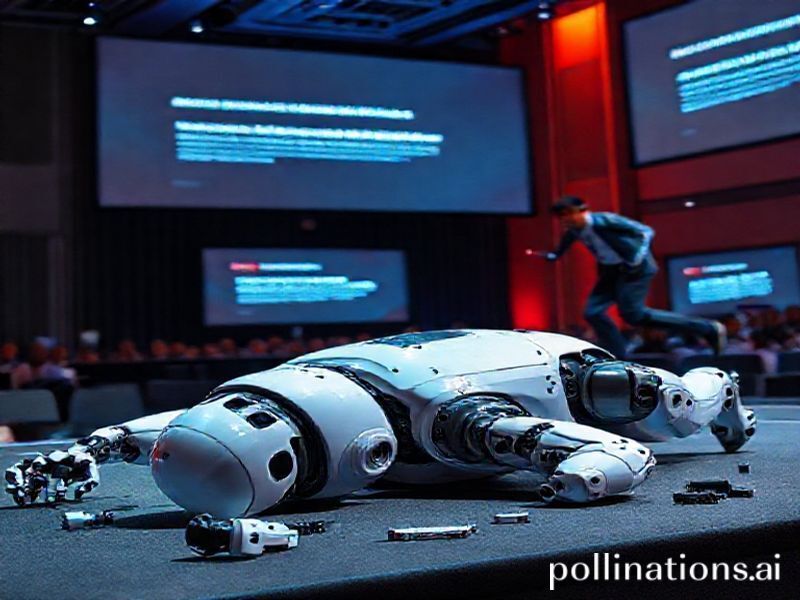

A single misstep on a brightly lit stage in Palo Alto last week did more than make headlines—it cracked open a conversation that the robotics industry has been tiptoeing around for years. When Tesla’s humanoid robot Optimus face-planted during a live demonstration, the gasp from the audience was almost as loud as the thud itself. But beyond the viral clips and late-show punchlines lies a deeper question: Was this a genuine autonomy failure, or evidence that we’re still secretly pulling the strings?

The incident, captured from multiple angles by eager tech journalists, shows Optimus attempting to navigate a simple staircase before losing balance and tumbling forward. What makes this spill significant isn’t the fall itself (robots fall all the time in labs) but the context surrounding it. Tesla had just finished boasting about Optimus’s “full autonomous navigation capabilities” when gravity decided to fact-check the claim in real time.

The Teleoperation Elephant in the Room

For years, robotics demonstrations have operated under a kind of don’t-ask-don’t-tell policy regarding remote control. While companies trumpet their AI breakthroughs, behind-the-scenes footage often reveals technicians with controllers, ready to intervene when algorithms falter. The Optimus incident has reignited scrutiny over what constitutes true robotic autonomy versus sophisticated puppetry.

Dr. Sarah Chen, a robotics researcher at MIT, explains the challenge: “The gap between controlled demonstration and real-world deployment is enormous. What looks like autonomy in a carefully orchestrated presentation might actually be heavy teleoperation or pre-programmed sequences that can’t adapt to unexpected variables—like a slightly different stair height or lighting condition.”

Decoding the Demonstration Dilemma

The robotics industry faces a unique paradox: investors and the public demand to see progress, but true autonomy is messy, slow, and often underwhelming to watch. This pressure creates incentives for companies to polish their presentations, sometimes blurring the line between current capabilities and future promises.

Red Flags in Robot Reveals

Industry veterans have developed a kind of sixth sense for spotting potentially misleading demonstrations. Here are key indicators that an “autonomous” robot might have hidden help:

- Perfect timing: Movements that seem too synchronized or choreographed

- Environmental control: Demonstrations in overly structured or familiar spaces

- Limited interaction: Robots that avoid spontaneous human engagement

- Camera angles: Strategic filming that might hide external equipment or operators

- Repetitive tasks: Actions that could be pre-programmed rather than adaptively chosen

The Technology Transparency Movement

In response to growing skepticism, some companies are pioneering a new approach: radical transparency. Boston Dynamics, despite its spectacular viral videos, now regularly publishes “failure reels” showing its robots falling, stumbling, and generally making mistakes. This honesty paradoxically builds trust: viewers understand they’re seeing genuine experimentation rather than marketing theater.

“We need to normalize the failure part of innovation,” says Marc Raibert, founder of Boston Dynamics. “Robots falling isn’t a bug in the development process—it is the development process. Every tumble teaches us something new about balance, adaptation, and resilience.”

Building Better Benchmarks

The industry urgently needs standardized metrics for evaluating robotic autonomy. Current measurements often focus on narrow capabilities—how well a robot can grasp objects or navigate obstacles—rather than broader adaptability and learning capacity. Proposed new benchmarks include:

- Novel Environment Navigation: Testing robots in spaces they’ve never encountered

- Adversarial Conditions: Introducing unexpected elements during demonstrations

- Human Interaction Metrics: Measuring genuine responsiveness versus scripted behaviors

- Failure Recovery Protocols: Evaluating how robots adapt when primary plans fail
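
To make the idea concrete, here is a minimal sketch of how results from benchmarks like these might be tallied. It is an illustration only, not an industry standard: the `Trial` record, its field names, and the `autonomy_report` function are all hypothetical, and the one rule it encodes (a success counts as autonomous only if no human operator intervened) is an assumption about how such a metric could be defined.

```python
from dataclasses import dataclass


@dataclass
class Trial:
    """One benchmark run (hypothetical record format)."""
    category: str              # e.g. "novel_environment", "failure_recovery"
    succeeded: bool            # did the robot complete the task?
    operator_intervened: bool  # any teleoperation input during the run?


def autonomy_report(trials):
    """Tally, per category, how many successes involved no human help."""
    report = {}
    for t in trials:
        stats = report.setdefault(
            t.category, {"trials": 0, "autonomous_successes": 0}
        )
        stats["trials"] += 1
        # Assumed rule: a success with operator input is not autonomous.
        if t.succeeded and not t.operator_intervened:
            stats["autonomous_successes"] += 1
    for stats in report.values():
        stats["autonomous_success_rate"] = (
            stats["autonomous_successes"] / stats["trials"]
        )
    return report


if __name__ == "__main__":
    demo = [
        Trial("novel_environment", succeeded=True, operator_intervened=False),
        Trial("novel_environment", succeeded=True, operator_intervened=True),
        Trial("failure_recovery", succeeded=False, operator_intervened=False),
    ]
    for category, stats in autonomy_report(demo).items():
        print(category, stats)
```

The point of separating `succeeded` from `operator_intervened` is that a polished demo and a genuinely autonomous run can look identical on stage; only a record of interventions tells them apart.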

Investment Implications and Industry Impact

The Optimus incident has sent ripples through the robotics investment community. Venture capitalists who once accepted glossy demonstrations at face value are now demanding more rigorous proof of autonomous capabilities. This shift could fundamentally reshape how robotics startups approach funding rounds and product announcements.

“We’re seeing a new due diligence standard emerge,” explains Jennifer Liu, a partner at RoboVentures Capital. “Investors want to see robots operating in uncontrolled environments, with verified autonomous decision-making. The era of carefully choreographed demos securing massive valuations may be ending.”

The Competitive Landscape Shift

This increased scrutiny is creating opportunities for companies that have been quietly building genuine autonomy. Startups focusing on robust, adaptable AI rather than flashy presentations are finding themselves suddenly competitive with larger, better-funded rivals. The market is beginning to reward substance over spectacle.

Looking Forward: The Path to True Autonomy

Despite the setback, Tesla’s Optimus stumble might ultimately accelerate progress toward genuine robotic autonomy. By highlighting the gap between perception and reality, the incident has created industry-wide pressure to develop more robust, adaptable systems. The next generation of humanoid robots will likely emerge from this controversy with stronger foundations and more honest marketing.

Emerging Technologies to Watch

Several technological developments promise to bridge the autonomy gap:

- Reinforcement Learning at Scale: Training robots through millions of simulated falls and recoveries

- Neuromorphic Computing: Hardware that mimics biological neural networks for real-time adaptation

- Multi-Modal Perception: Integrating vision, touch, and proprioception for better environmental understanding

- Distributed Learning Networks: Robots sharing experiences across a global network to accelerate collective learning

The road to robotic autonomy is paved with tumbles, both literal and metaphorical. Each fall brings valuable data, each failure teaches important lessons, and each stumble—when properly analyzed—moves us closer to machines that can truly navigate our complex world independently. The Optimus face-plant wasn’t just an embarrassing moment; it was a necessary reality check that will ultimately benefit the entire industry.

The future belongs not to the robots that never fall, but to those that learn to get back up—on their own.